21 Ways to Improve Data Quality in Your Predictive Analytics Strategy

By: Matt Angerer

The early part of my IT career was a whirlwind of adjusting to the real world and trying to understand the evolution of enterprise software. It is often said that history repeats itself if you don’t learn from the past. That idea made a lasting impression on me. For that reason, I would often “interview” the more seasoned IT folks I worked with in my early days as a Systems Analyst at a global consumer products company. Of course, these weren’t formal interviews – rather, discussions about the way things “used to be” in the late 70s, 80s, and 90s.

By 2002, the world as I knew it was changing. After 9/11, many of my closest friends were deployed to Iraq for the initial surge into Baghdad. Having just graduated from Penn State, I decided to enter the world of IT consulting with a boutique firm that specialized in helping the Department of Defense, including the U.S. Army and the Navy. I figured if I wasn’t on the battlefield, I could help the cause state-side by assisting with the implementation of Manugistics (now JDA) and SAP for the Defense Logistics Agency. This is where I received my first taste of “Big Data” and of how a predictive analytics strategy can be used to improve the accuracy of shipments to our warfighters around the world. Manugistics was a demand planning tool that allowed the DoD to predict future needs based on historical data. That sounds simple in theory, but it’s very complicated when you analyze the algorithms and variables used to make those predictions.

Admittedly, I was green and naïve at this time. Even though I was fresh out of college with over 10 years of computing experience already, I had never grasped the complexity of enterprise software, or how powerfully historical data can predict patterns and drive buying decisions, until I joined the Business Systems Modernization (BSM) project for the Defense Logistics Agency. What I learned on this project forever changed the way I looked at how transactional data was collected and how it could be used to give our military new advantages.

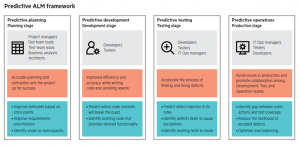

The same concepts of predictive planning are now going mainstream with modern software development teams. HPE is leading the charge with its predictive ALM framework (see image). Organizations that leverage HPE Quality Center or ALM today are sitting on a goldmine of data that will feed this new predictive framework, allowing teams to improve estimates based on story points, improve requirement prioritization, and identify under- or over-capacity resources. Additionally, the algorithms built into the predictive ALM framework will help predict which code commits will break the build, and identify existing code that provides desired functionality. Your QA team will also be able to predict defect injection and fix rates, identify defects likely to cause escalations, and identify existing tests to re-use. Meanwhile, your operations team will be better able to avoid issues in production and promote collaboration among Development, Test, and Operations.

Sounds too good to be true, right? The truth of the matter is that predictive analytics hinges on one very important concept: data quality. The rule of computing that we all learned early on, “Garbage In = Garbage Out,” still applies today and will never change. It needs to be respected and dealt with accordingly. In this article, we’re going to provide you with some tips on how to prepare your ALM and Quality Center projects for the future of predictive analytics strategy. Our RPALM methodology was designed to assist organizations with the pre-planning and data clean-up often required to use the predictive analytics algorithms available in the new ALM framework releasing in Q3 and Q4 of 2016.

Contact us today for special pricing on our 2-week RPALM data quality assessment and improvement inspection. An HPE ExpertOne certified Architect from ResultsPositive will be assigned to thoroughly evaluate your ALM or Quality Center landscape. We use a 21-point checklist to ensure the overall data quality in each of your ALM and Quality Center Projects.

The RPALM 21-point checklist includes the following:

- Releases

– We run an analysis to make sure all of your ALM assets (e.g., requirements, tests, test instances, and defects) are in the same project as the releases they are assigned to. This is an important part of ensuring the new algorithms pick up on every potential trend and/or anomaly. If we notice assets out of place, we’ll re-create them in the same project as the given release.
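
As a rough illustration of the release check above, here is a minimal Python sketch. It assumes the assets have already been exported into plain records; the `Asset` class, its field names, and the sample data are hypothetical and are not part of any ALM API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Asset:
    asset_id: str
    kind: str      # "requirement", "test", "test_instance", or "defect"
    project: str   # project the asset currently lives in (hypothetical field)
    release: str   # release the asset is assigned to (hypothetical field)


def misplaced_assets(assets, release_project):
    """Return assets whose project differs from the project owning their release.

    Assets assigned to a release missing from release_project are flagged too.
    """
    return [a for a in assets if release_project.get(a.release) != a.project]


# Hypothetical export: which project owns each release, plus a few assets.
release_project = {"R1.0": "Billing", "R2.0": "Portal"}
assets = [
    Asset("REQ-1", "requirement", "Billing", "R1.0"),
    Asset("TST-7", "test", "Portal", "R1.0"),    # lives in the wrong project
    Asset("DEF-3", "defect", "Portal", "R2.0"),
]

misplaced = misplaced_assets(assets, release_project)
print([a.asset_id for a in misplaced])  # ['TST-7']
```

Each flagged asset is a candidate for being re-created in the project that owns its release.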

- Requirements

– We double-check every ALM project to ensure that you’re leveraging the requirements tree. This is very important, because the checks that follow all trace tests and defects back to requirements.

– We also analyze your application under test (AUT) and compare it against your requirement tree structure. Your requirement tree structure should mirror your software product. If you have distinct modules within your product (e.g., SD, MM, CRM) – these should be represented as “groups” with requirements rolling up under them. We can help you ensure that your tree structure is logical and ready for the new algorithms.

– We will review requirements, help you include rich-text descriptions, and upload relevant attachments. Doing so will help the new algorithms detect relevancy and help produce more accurate outcomes.

– We will analyze every parent and child requirement to ensure traceability is enforced. The goal should always be 100% traceability. We believe in the motto of “no requirement or test case shall be left behind.” All child requirements should have a parent and test cases should have a requirement to cover. If a test case doesn’t cover a requirement, we call into question the validity of the test case immediately. This creates efficiency and drives the optimal use of HPE ALM.

– We analyze all of the requirements to evaluate whether defects are linked appropriately. Many clients will use the Manual Runner or HPE Sprinter to log defects against design steps of a test run. That is fine and we encourage this approach. However, in order for an organization to truly understand the problematic areas of a software application under test, we take it a step further by manually tracing defects to specific requirements. The new algorithms will look at this relationship closely to reveal problematic areas in your application where regression testing should increase.
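
The parent/child and coverage rules above can be pictured as a simple gap report. The sketch below is only an illustration under assumed data shapes: `requirements` maps a hypothetical requirement ID to its kind and parent, and `tests` maps a test ID to the set of requirement IDs it covers. None of these names come from ALM itself.

```python
def traceability_gaps(requirements, tests):
    """Report orphaned child requirements, uncovered requirements, untraced tests."""
    children = [rid for rid, r in requirements.items() if r["kind"] == "child"]

    # A child requirement is orphaned when its parent is not in the tree.
    orphans = sorted(rid for rid in children
                     if requirements[rid]["parent"] not in requirements)

    # Every requirement ID referenced by at least one test.
    covered = set().union(*tests.values())
    uncovered = sorted(rid for rid in children if rid not in covered)

    # A test is untraced when it covers no known requirement at all.
    untraced = sorted(tid for tid, reqs in tests.items()
                      if not (requirements.keys() & reqs))

    coverage = (len(children) - len(uncovered)) / len(children) if children else 1.0
    return {"orphans": orphans, "uncovered": uncovered,
            "untraced_tests": untraced, "coverage": coverage}


# Hypothetical data: the "MM" group is missing, so MM-1 has no parent in the tree.
requirements = {
    "SD":   {"kind": "group", "parent": None},
    "SD-1": {"kind": "child", "parent": "SD"},
    "SD-2": {"kind": "child", "parent": "SD"},
    "MM-1": {"kind": "child", "parent": "MM"},
}
tests = {"T1": {"SD-1"}, "T2": set(), "T3": {"MM-1"}}

gaps = traceability_gaps(requirements, tests)
print(gaps["orphans"], gaps["uncovered"], gaps["untraced_tests"])
# ['MM-1'] ['SD-2'] ['T2']
```

A `coverage` value below 1.0 means the 100% traceability goal has not yet been met for the child requirements in the export.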

- Test Plans

– Every test case in your test plan needs to cover at least one requirement, period. We closely analyze all of the test cases in your test plan to ensure this is the case. If we notice large gaps, we facilitate a requirements tracing workshop for your organization to get all functional stakeholders on the same page. Sometimes it’s not easy to quickly identify what requirement a particular test case is truly covering. It’s also not easy to identify all of the touch points. This is where subject matter expertise (SME) within your organization comes into play. But, guess what? You need to bring the necessary brains to the table for a collaborative discussion. This requires facilitation. RPALM is our methodology that allows you to transfer this responsibility to us and bring together the right people throughout the organization.

– Your tests should also be linked to defects. We know there is an indirect relationship to a test case in the test plan when you link a defect to a design step while manually executing the script. However, in order to take full advantage of the new algorithms for predictive analytics strategy, we help you take it one step further. Doing so will give you better predictive insight into which test cases are truly effective in uncovering defects. You can learn a lot about the quality of your test cases from the new predictive analytics engine from HPE too. Just because you have X number of test cases and “coverage” in place doesn’t mean that the quality of those test cases is any good. The quality of a test case hinges on its ability to explore branches of an application.
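
To make the test-to-defect link concrete, the following sketch counts linked defects per test case. It assumes the links are available as simple `(defect_id, test_id)` pairs; the identifiers are made up, and the count is only one rough signal, not the measure HPE's engine uses.

```python
from collections import Counter


def defects_per_test(test_ids, defect_links):
    """Count how many defects are directly linked to each test case."""
    counts = Counter(test_id for _defect_id, test_id in defect_links)
    return {test_id: counts.get(test_id, 0) for test_id in test_ids}


# Hypothetical export of test IDs and direct defect-to-test links.
test_ids = ["T1", "T2", "T3"]
defect_links = [("DEF-1", "T1"), ("DEF-2", "T1"), ("DEF-3", "T3")]

found = defects_per_test(test_ids, defect_links)
never_found_a_defect = [t for t, n in found.items() if n == 0]

print(found)                 # {'T1': 2, 'T2': 0, 'T3': 1}
print(never_found_a_defect)  # ['T2']
```

A zero count does not prove a test is weak, since it may cover a stable area, but it is a reasonable starting point for reviewing which test cases actually uncover defects.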

- Test Runs

– We help you review your test runs to ensure that they’re being captured accurately. We also work with your QA Lead and Testers to teach them best practices around executing a manual run with either the Manual Runner or HPE Sprinter. We review the importance of avoiding multi-tasking while executing a test case, encouraging focus on the AUT and not on the instant message that popped up, or the email that their boss just sent. In order to properly capture the true amount of time it takes to execute a test case from start to finish, the QA Analyst needs to keep their eye on the prize. If by chance they do need to step away from their workstation, there is a feature in ALM to stop the execution run and pick back up on it when they return. We show your team how to do this in order to ensure the highest data quality.
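
One way to spot runs where the clock kept ticking while the tester was away is to compare each run with the typical duration for the same test. The sketch below is an assumption-laden illustration: the `(run_id, test_id, minutes)` tuples and the threshold factor of 3 are arbitrary choices, not ALM settings.

```python
from collections import defaultdict
from statistics import median


def suspicious_runs(runs, factor=3.0):
    """Flag runs that took more than `factor` times the median for their test."""
    durations = defaultdict(list)
    for _run_id, test_id, minutes in runs:
        durations[test_id].append(minutes)
    typical = {test_id: median(values) for test_id, values in durations.items()}
    return [run_id for run_id, test_id, minutes in runs
            if minutes > factor * typical[test_id]]


# Hypothetical run history: (run_id, test_id, duration in minutes).
runs = [
    ("RUN-1", "T1", 12), ("RUN-2", "T1", 14), ("RUN-3", "T1", 11),
    ("RUN-4", "T1", 95),  # likely left open during an interruption
    ("RUN-5", "T2", 30), ("RUN-6", "T2", 28),
]

flagged = suspicious_runs(runs)
print(flagged)  # ['RUN-4']
```

Flagged runs are worth a conversation with the tester before their durations are trusted as input for estimates.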

- Defects

– We closely analyze how your organization is using the built-in workflow of either Quality Center or ALM and ensure there are proper procedures in place for which fields must be populated and which need not be. We provide standard templates that your team can use to enforce policy and procedure among your development and testing teams.

– We review all user-defined fields at the project level across your ALM landscape and confirm with the project sponsor whether each one is required. If necessary, we’ll work out a plan to sunset unneeded fields and archive their data. Any truly essential fields are marked as mandatory.
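
The field review above can be supported with two simple measurements: which defects are missing required values, and how often each field is actually filled in. The sketch below assumes defects exported as dictionaries; the field names are invented for illustration and are not ALM's own field names.

```python
def is_blank(value):
    """Treat None and whitespace-only strings as empty."""
    return value is None or str(value).strip() == ""


def missing_required(defects, required_fields):
    """Map each defect ID to the required fields it leaves empty."""
    report = {}
    for defect in defects:
        missing = [f for f in required_fields if is_blank(defect.get(f))]
        if missing:
            report[defect["id"]] = missing
    return report


def fill_rate(defects, field):
    """Fraction of defects in which `field` has a non-empty value."""
    if not defects:
        return 0.0
    return sum(not is_blank(d.get(field)) for d in defects) / len(defects)


# Hypothetical defect export with made-up field names.
defects = [
    {"id": "DEF-1", "severity": "High", "detected_in_release": "R1.0",
     "assigned_to": "jlee"},
    {"id": "DEF-2", "severity": "", "detected_in_release": "R1.0"},
]
required = ["severity", "detected_in_release", "assigned_to"]

gaps_by_defect = missing_required(defects, required)
print(gaps_by_defect)                     # {'DEF-2': ['severity', 'assigned_to']}
print(fill_rate(defects, "assigned_to"))  # 0.5
```

A field with a persistently low fill rate is either a candidate for sunsetting or, if it is truly essential, a candidate for being made mandatory.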

- Third-Party Tool Integrations

– Many organizations using ALM today leverage the built-in power of Application Lifecycle Intelligence (ALI) to integrate with third-party tools like Subversion and TFS. Perhaps your organization is also using tools like TaskTop to integrate with incident tracking systems like ServiceNow or Service Anywhere. We closely analyze the integration points and the data between both systems to help you understand how the ALM Predictive Framework’s algorithms will assess information like code commits (via ALI) or incidents vs. defects.

Inquire today about RPALM and how we can help your organization prepare for the next wave of predictive analytic computing. As we know, history often repeats itself unless we’re careful. Your organization is sitting on a goldmine of ALM and Quality Center project data that can be cleaned up and effectively leveraged by the new ALM Predictive Analytics algorithms to help you avoid many of the pitfalls that led to previously failed IT projects and cost overruns. By leveraging historical data and closely scrutinizing our failures, we can more intelligently prepare for the future of software delivery from a tools, people, and process perspective.
