Risk-Based Quality Assurance and ETL

By: Matt Angerer

Contrary to the opinion of some, data warehouses are not going anywhere anytime soon. In fact, the latest IDC research reveals that the big data technology and services market is set to enjoy a compound annual growth rate of almost 27 percent. Within the next three years, the industry as a whole is projected to have a value of more than $41 billion.

Clearly, this is an area that businesses simply cannot ignore as they attempt to organize and utilize a continual stream of massive data. With proper handling, Big Data can identify emerging trends and patterns, allowing businesses in all industries to make significant operational improvements that can boost their bottom lines. Even the data amassed in your test management repository alone can support intelligent, data-driven decisions, a practice known as predictive analysis. With risk-based quality assurance and testing, you can take into account failure probability based on historical data.

Using Historical Data to Your Advantage

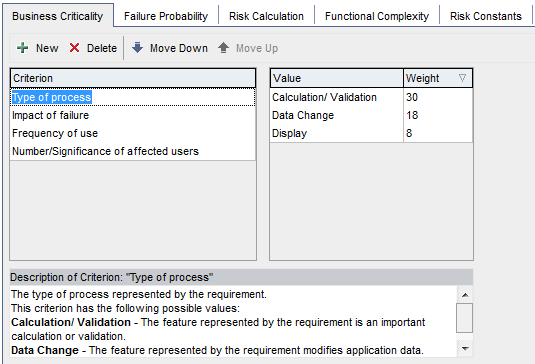

Risk-based quality assurance and testing has primarily focused on historical data and qualitative input from subject matter experts. With Risk-based Quality Management in HP Quality Center, you can specify the business criticality of a requirement across four out-of-the-box criteria: Type of Process, Impact of Failure, Frequency of Use, and Number or Significance of Affected Users. Since everything should start with your requirements, this gives your team the opportunity to really think about how you would like to ‘weight’ the values of each criterion.

For instance, the “type of process” when defining your Risk-Based Quality Management policy could be a calculation/validation, data change, or perhaps a display. The impact of failure could range from legal ramifications to low-quality data. The other criteria for defining business criticality are the frequency of use and the number of users impacted by a requirement, each with corresponding values (e.g., Very Often, Often, Rare) and Weights as depicted below.

Another factor to consider is Failure Probability of a requirement. What kind of change are you implementing? Is it a new feature or a change to an existing one? Also, how mature is the software that you’re making changes to and how many screens will the change affect? By taking into account the functional complexity of each requirement, HP Quality Center is able to automatically perform the risk calculation for you.
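To make the idea concrete, here is a minimal sketch of how weighted criteria might roll up into a business criticality, a failure probability, and an overall risk level. This is not HP Quality Center's actual algorithm: the weights, the 1 to 3 rating scale, the thresholds, the risk matrix, and every name in the code are assumptions chosen purely for illustration.

```python
# Illustrative only: the weights, ratings, thresholds, and matrix below are
# assumptions, not HP Quality Center's actual defaults or algorithm.

# Relative weight of each business criticality criterion (sums to 100).
CRITICALITY_WEIGHTS = {
    "type_of_process": 25,
    "impact_of_failure": 35,
    "frequency_of_use": 20,
    "affected_users": 20,
}

# Relative weight of each failure probability criterion (sums to 100).
FAILURE_WEIGHTS = {
    "change_type": 40,
    "software_maturity": 30,
    "screens_affected": 30,
}

# Hypothetical lookup: (business criticality, failure probability) -> risk.
RISK_MATRIX = {
    ("high", "high"): "high",
    ("high", "medium"): "high",
    ("high", "low"): "medium",
    ("medium", "high"): "high",
    ("medium", "medium"): "medium",
    ("medium", "low"): "low",
    ("low", "high"): "medium",
    ("low", "medium"): "low",
    ("low", "low"): "low",
}


def weighted_score(ratings: dict, weights: dict) -> float:
    """Weighted average of ratings, where each rating is 1 (low) to 3 (high)."""
    total = sum(weights[name] * ratings[name] for name in weights)
    return total / sum(weights.values())


def level(score: float) -> str:
    """Map a 1.0-3.0 score onto a coarse level using assumed thresholds."""
    if score >= 2.5:
        return "high"
    if score >= 1.75:
        return "medium"
    return "low"


def assess(criticality_ratings: dict, failure_ratings: dict) -> tuple:
    """Return (business criticality, failure probability, risk)."""
    criticality = level(weighted_score(criticality_ratings, CRITICALITY_WEIGHTS))
    probability = level(weighted_score(failure_ratings, FAILURE_WEIGHTS))
    return criticality, probability, RISK_MATRIX[(criticality, probability)]


# Example: a calculation with legal impact, delivered as a new feature
# on mature software, touching a moderate number of screens.
requirement = assess(
    {"type_of_process": 3, "impact_of_failure": 3,
     "frequency_of_use": 2, "affected_users": 2},
    {"change_type": 3, "software_maturity": 1, "screens_affected": 2},
)
print(requirement)
```

The point of the sketch is the shape of the calculation rather than the numbers: once your team agrees on weights, the ranking of requirements follows mechanically, and testing effort can be assigned from the resulting risk level.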

The recommendation produced by HP Quality Center helps you deploy testing resources in the most efficient and effective manner. As time crunches occur toward the end of a project or before a monthly service pack, knowing which areas of your software are most likely to be impacted by a change is invaluable. After all, the goal is to ship high-quality software – but in order to do that, you need to be testing the right areas of the software.

The Challenges of ETL

If we shift gears a little bit, I would like to shed light on a topic that closely relates to Big Data and predictive analysis: ETL, or Extraction, Transformation, and Loading. ETL is increasingly difficult given the complexity of most business environments and production systems. ETL processing and testing face a number of significant hurdles, including the lack of the user interface that is common in traditional application testing. Furthermore, there is the issue of dealing with the sheer volume of data.

The volume of data can easily extend to millions of daily transactions. Making matters even more complicated, such data stems from multiple sources. When you add in source systems that are continually changing, incomplete data, and exceptions, it becomes easy to see how challenging ETL testing can be.

In order for ETL processes to be verified, significant testing must take place. This means making special considerations during the planning process. Ultimately, the goal is to ensure that data is stringently maintained without being transformed incorrectly, or even worse, going missing altogether. The following five tips can lead to better ETL testing:

- Begin by considering whether all of the expected data was actually loaded from the source file. Without conducting a full inventory count, you simply have no way of knowing whether the correct records made it into the correct landing area or into the data warehouse.

- You need to make certain you don’t have sets of duplicate data, which can easily skew aggregate reports. Duplication of data can often occur as the result of defects within the ETL process. Without checking, you simply have no way of knowing that duplicate data is moving across the various stages into the data warehouse until it is too late.

- Are all of the data transformation rules working correctly? One of the biggest challenges with ETL testing is that data can be received in a variety of formats. Among the goals of the ETL testing process is to render data so that it can be stored in a consistent manner. That cannot happen if the data transformation rules are not working as they should.

- Consider whether all of your data is being processed. If your system is not generating error logs, you may have no way of investigating this process. It is vital to ensure that the system you have in place is robust enough to handle everything from a power outage to a system crash.

- Finally, think about whether the system you have is scalable. While it might work fine for the amount of data currently coming in, remember that data warehousing is set to keep growing rapidly. This means your system might not be able to handle the amount of new data available in just a couple of years.
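The first three tips lend themselves to simple automated checks. The sketch below is one possible way to express them in plain Python, under the assumption that source and target records can be pulled into memory as dictionaries sharing a key. The helper names, the `order_id` key, the sample rows, and the `normalize` rule are all hypothetical; a real warehouse would more likely run equivalent checks as SQL against the staging and target tables.

```python
from collections import Counter
from decimal import Decimal


def missing_keys(source_rows, target_rows, key):
    """Completeness: keys present in the source but never loaded."""
    source_keys = {row[key] for row in source_rows}
    target_keys = {row[key] for row in target_rows}
    return sorted(source_keys - target_keys)


def duplicate_keys(rows, key):
    """Duplicates: keys that appear more than once in the loaded data."""
    counts = Counter(row[key] for row in rows)
    return sorted(k for k, n in counts.items() if n > 1)


def transformation_failures(source_rows, target_rows, key, rule):
    """Re-apply the rule to each source row and compare with what was loaded."""
    loaded = {row[key]: row for row in target_rows}
    return sorted(
        row[key]
        for row in source_rows
        if row[key] in loaded and rule(row) != loaded[row[key]]
    )


def normalize(row):
    """Hypothetical rule: amounts stored as cents, country codes upper-cased."""
    return {
        "order_id": row["order_id"],
        "amount_cents": int(Decimal(row["amount"]) * 100),
        "country": row["country"].strip().upper(),
    }


# Made-up sample data: order 3 is missing, order 2 is duplicated,
# and order 4 was loaded without its country code being upper-cased.
source_rows = [
    {"order_id": 1, "amount": "19.99", "country": " us "},
    {"order_id": 2, "amount": "5.00", "country": "DE"},
    {"order_id": 3, "amount": "12.50", "country": "fr"},
    {"order_id": 4, "amount": "7.25", "country": "gb"},
]
target_rows = [
    {"order_id": 1, "amount_cents": 1999, "country": "US"},
    {"order_id": 2, "amount_cents": 500, "country": "DE"},
    {"order_id": 2, "amount_cents": 500, "country": "DE"},
    {"order_id": 4, "amount_cents": 725, "country": "gb"},
]

print("missing:", missing_keys(source_rows, target_rows, "order_id"))
print("duplicates:", duplicate_keys(target_rows, "order_id"))
print("bad transforms:",
      transformation_failures(source_rows, target_rows, "order_id", normalize))
```

Comparing keys rather than raw row counts is a deliberate choice here: a missing record and a duplicated record would otherwise cancel each other out and leave the totals looking correct.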

As you define your Risk-based Quality Assurance and ETL Testing strategies, try to see the forest for the trees. Nobody has a crystal ball, but the promise of Big Data gives us the power of predictive analysis – which is the closest we’ll come to the proverbial crystal ball. So, sit back and look at the broader picture of how your organization can implement these measures today. Be smart in how you approach the setup of your requirements management module in HP Quality Center, how data considerations affect those requirements, and most importantly, how you can work with your internal stakeholders to implement that strategy.

About ResultsPositive

ResultsPositive, an award-winning HP Partner of the Year for Sales Growth (2014), Customer Support (2013), PPM (2010, 2011, 2012), and Executive Scorecard (2012), was founded in 2004. ResultsPositive is a leader in IT Software consulting delivering Project & Portfolio Management, Application Transformation, Business Intelligence, Mobility, Application Lifecycle Management, IT Service Management, Business Service Management, Healthcare Transformation, and Cloud and Automation solutions across the entire HP IT Performance Suite for medium-sized and Fortune 500 companies. As both an HP Platinum Partner and HP ASMP-S Support Provider, ResultsPositive has the experience, support, and training necessary to turn your complex IT processes into tangible business solutions.

For more information about our testing services, visit:

https://resultspositive.com/application-lifecycle/testing/
